(This post is more or less a narrative from my presentation at the 2007 Washington D.C. eMetrics / Marketing Optimization Summit)

“You know that campaign with the best response rate ever, the one with $5 million in sales? We lost over $1 million on it, according to Finance. Something about the difference between Measuring Campaigns and Measuring Customers.”

– Me, giving my boss at HSN a piece of good news, 1991

That, my friends, was the first time I found out just how important control groups are to measuring the success of customer campaigns in an interactive, always-on environment.

The Finance department – through the Business Intelligence unit – was measuring the net profitability of the campaign at the customer level. We (Marketing) were measuring net profitability at the campaign level, based on response to the campaign. The difference was close to $3 million: a $1.9 million profit using Marketing’s campaign measurement versus a nearly $1 million loss using Finance / BI’s customer measurement.

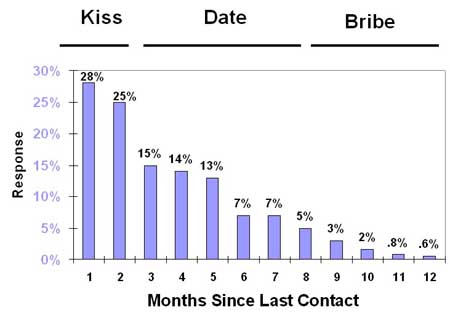

The crux of this difference is always-on, self-service demand, or what Kevin calls Organic Demand. The only way to measure these customer demand effects accurately – and therefore the true profitability of campaigns – is with control groups. Online, this issue is primarily relevant to e-mail marketers (customer marketing), but it comes into play in lots of different ways, especially if you have PPC or display advertising taking credit for generating sales from existing customers.

There seems to be a lot of confusion around what control groups are and why you should care about them, and I’m hoping this post helps clear some of that up! But before I lose you in the details, here is why you should care about this topic:

1. Tactically: First and foremost, if you’re not using control groups, you are most likely chronically underestimating the sales / visits / whatever KPI you generate. “Response” is almost always lower than actual demand, because your campaigns generate sales / visits / whatever KPI that you cannot track through campaign response mechanisms. Is full credit for what you contribute to the bottom line important to you? If so, stick around and read the rest of the post.

2. Strategically: In a multi-platform, multi-channel, multi-source world, control groups are the gold standard in customer campaign measurement. You will eventually be required to have a common success measurement that can be used in any situation, as opposed to success measurements “customized” for the quirks of every marketing situation that develops.

If you are not using controls, then your campaign results are always suspect. The fact that nobody has asked you yet to prove the sales you claim are actually generated by your campaigns is not an excuse; that day will come. Will you be ready? When “prove it” is on the table, the folks using control groups win over those who are not, every time.

3. Culturally: The concept of “variance reporting” that is fundamental to the control group idea is very well understood by senior management. In fact, despite sounding complex, the control group idea is the easiest to explain to management, and it generates a tremendous level of confidence in what you are doing.

This is why confidence in controlled results is so high: there are no “caveats” and no need for specialized understanding from management of different channels or technologies. No explanations required for technological causes of error – why does this system say sales were this and this other system say sales were that? No doubts about the source of the ROI, no questions about external effects. Clean and simple, elegant in execution.

Interested? OK, here we go. Here is the idea in a nutshell.

Let’s talk a little about the idea of “incremental”, as in incremental sales or visits. Incremental means “extra beyond normal” or what is often called “lift” in the database marketing / BI world. The central issue is this: if I spend money on a campaign, I want the campaign to generate incremental sales beyond what I would get if I did not do the campaign. That’s logical, right? Why else spend the money, if the campaign is not going to lift my sales over and above what they would have been without the campaign?

In offline retail, Wall Street is always after one KPI, called the “comps”, short for “same-store sales comparisons”. What they want to know is, for stores open at least a year, what were the sales this quarter versus the same quarter last year? That growth, or lift, is what determines how well the company is doing. The reason is simple: if they just look at gross demand, it can be inflated by opening new stores. These new store openings mask the true productivity of the operation, and Wall Street knows productivity is what drives profit growth in retail. So they want to know the incremental sales versus last year of a finite set of stores open at least a year, not the sales of all stores. In using this approach, they are controlling for new store openings, removing their influence.
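To make the comps idea concrete, here is a minimal sketch that compares growth across all stores with growth across only the stores open at least a year. The store names, dates, and sales figures are hypothetical, and treating “open at least a year” as 365 days before the quarter end is a simplifying assumption; real retailers each define their comp base in their own way.

```python
from datetime import date

def comparable_store_growth(stores, as_of, min_age_days=365):
    """Growth vs. the same quarter last year, counting only stores open at least a year.

    min_age_days=365 is a simplified, assumed definition of the comp base.
    """
    comps = [s for s in stores if (as_of - s["opened"]).days >= min_age_days]
    this_year = sum(s["sales_this_q"] for s in comps)
    last_year = sum(s["sales_same_q_last_year"] for s in comps)
    return (this_year - last_year) / last_year

def total_growth(stores):
    """Naive growth across every store; new openings inflate this number."""
    this_year = sum(s["sales_this_q"] for s in stores)
    last_year = sum(s["sales_same_q_last_year"] for s in stores)
    return (this_year - last_year) / last_year

# Hypothetical chain: two established stores plus one opened this year (C)
stores = [
    {"name": "A", "opened": date(2004, 3, 1), "sales_this_q": 1_100_000, "sales_same_q_last_year": 1_000_000},
    {"name": "B", "opened": date(2005, 6, 1), "sales_this_q": 950_000, "sales_same_q_last_year": 1_000_000},
    {"name": "C", "opened": date(2007, 2, 1), "sales_this_q": 800_000, "sales_same_q_last_year": 0},
]
as_of = date(2007, 6, 30)

comp_growth = comparable_store_growth(stores, as_of)
all_growth = total_growth(stores)
print(f"comps: {comp_growth:.1%}, all stores: {all_growth:.1%}")
```

With these made-up numbers, the all-store figure looks like explosive growth only because store C is new; the comp base (A and B) shows the much smaller lift that reflects actual productivity.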

And that’s exactly what control groups are for – to remove the influence of any number of factors, and arrive at the true driver of the incremental change.

When testing the effectiveness of drugs, the control group is often given a placebo: people in it take a sugar pill instead of the real drug. This is done because of the placebo effect, the tendency of a person to feel better when they believe they are taking a drug. Why go to the trouble? Because the testers want to measure the real contribution of the drug, the incremental effect over and above the placebo effect.

OK? So here is how it works in customer marketing:

1. Choose a population to target with a campaign

2. Take out a random sample of that population to use as control – the “control group”. The remaining members of the population after the sample is taken out are called the “test group”.

3. Send the campaign to the test group, and do nothing to the control group. Measure the performance of the test versus control over time, and calculate the incremental impact on the test group of receiving the campaign.
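Steps 1 and 2 above boil down to a random holdout. Here is a minimal Python sketch, assuming your target population is just a list of customer IDs; the function name `split_control` and the 10% default are illustrative choices, not part of any particular campaign tool.

```python
import random

def split_control(customer_ids, control_fraction=0.10, seed=None):
    """Randomly hold out a control group; everyone else becomes the test group.

    Returns (test_ids, control_ids). `seed` only makes the split reproducible.
    """
    if not 0 < control_fraction < 1:
        raise ValueError("control_fraction must be between 0 and 1")
    ids = list(customer_ids)
    rng = random.Random(seed)
    rng.shuffle(ids)  # random order, so the holdout is an unbiased sample
    n_control = round(len(ids) * control_fraction)
    control_ids = ids[:n_control]  # receives nothing
    test_ids = ids[n_control:]     # receives the campaign
    return test_ids, control_ids

# Usage with a hypothetical population of 100,000 customer IDs
population = range(100_000)
test_ids, control_ids = split_control(population, control_fraction=0.10, seed=42)
print(len(test_ids), len(control_ids))  # 90000 10000
```

The key design point is that the split is random, not hand-picked: the control group has to look like the test group in every way except receiving the campaign, or the comparison in step 3 measures something other than the campaign.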

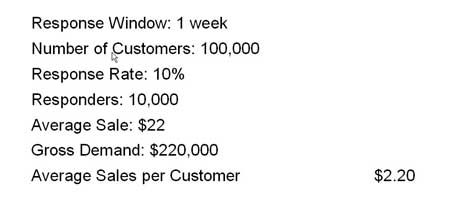

A typical email campaign to best customers might look something like this. Let’s say the campaign has an end date of 1 week after the drop; the customer has to react within a week to take advantage of the offer:

Respectable results for a best customer target – you do segment best customers out for different treatment, don’t you?

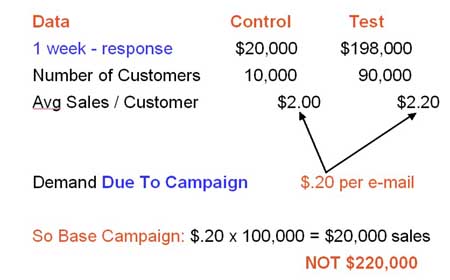

Here is what the same campaign probably looks like using a control group, after one week of response:

Note that 10% of the 100,000 targets (10,000 customers) were taken out as control; the remaining 90,000 received the campaign.

If this campaign had dropped to the entire population of 100,000, the campaign that appears to have generated $220,000 in sales really generated only $20,000, because the incremental sales impact of the campaign versus the control group, who received no campaign, was only $20,000 ($0.20 per e-mail). The other $200,000 would have been generated by this customer segment without the campaign. Follow?
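Here is that arithmetic as a small Python sketch: compare sales per customer in test versus control, then scale the difference up to the full population. The original results table is not reproduced here, so the inputs are hypothetical: $198,000 from 90,000 test customers and $20,000 from 10,000 controls are figures I back-derived from the roughly $2.20 and $2.00 per customer implied by the $220,000 and $200,000 above.

```python
def incremental_sales(test_sales, test_n, control_sales, control_n, population_n):
    """Lift of test over control, extrapolated to the whole targeted population."""
    test_per_customer = test_sales / test_n            # what campaign recipients spent
    control_per_customer = control_sales / control_n   # what they'd have spent anyway
    lift_per_customer = test_per_customer - control_per_customer
    return {
        "projected_campaign_sales": test_per_customer * population_n,
        "baseline_sales": control_per_customer * population_n,
        "incremental_sales": lift_per_customer * population_n,
        "lift_per_customer": lift_per_customer,
    }

# Hypothetical inputs, back-derived from the per-customer figures in the text
result = incremental_sales(test_sales=198_000, test_n=90_000,
                           control_sales=20_000, control_n=10_000,
                           population_n=100_000)
for name, value in result.items():
    print(f"{name}: ${value:,.2f}")
```

Comparing per-customer averages, rather than raw totals, is what lets a 90,000-person test group be compared fairly with a 10,000-person control group.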

Now at this point, you’re probably saying, “Hey Jim, I get it and all that but there’s no fricking way I’m going to implement this at my current job, I mean, I can’t take a hit like that in performance!”

To which I would say:

1. Don’t use controls until you change jobs – you’ll look like a major scientific testing hero at your next job!

2. You don’t have all the data to make this call yet…we need to talk about what I call “halo effects”.

Halo effects are generally the unintended actions taken by the targets of the campaign. At a basic level, they are sales generated because of the campaign that you can’t track back to the campaign using a “campaign response” methodology.

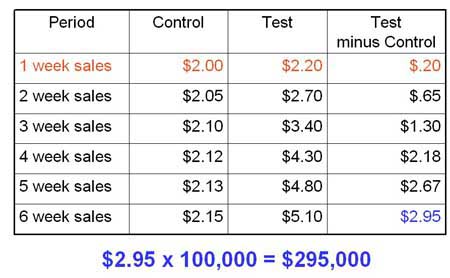

Here’s what this campaign looks like after 6 weeks, when probably almost all the halo effects would be included. The numbers for each week are cumulative; they include the sales from the prior weeks:

Now that’s more like it! If this campaign had dropped to the entire population (including the control), it would have generated $295,000 in incremental sales, measured test versus control.

In this case, there were $75,000 in sales over and above what a “response” measurement of $220,000 shows. These sales come primarily from people who did respond to the campaign, just not in a way you could track.
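A minimal sketch of the week 1 versus week 6 comparison, using only the figures given in this post. It assumes the $295,000 is the controlled (test versus control) incremental figure, and that tracked response stays at $220,000 after week 1 because the offer expired then; the function name `gap_vs_response` is hypothetical.

```python
def gap_vs_response(incremental_by_week, response_sales):
    """Controlled incremental sales minus response-tracked sales, per week.

    Negative: "response" overstates the campaign's impact.
    Positive: halo sales that response tracking never saw.
    """
    return {week: inc - response_sales for week, inc in incremental_by_week.items()}

# Cumulative incremental sales (test vs. control, scaled to 100,000 customers)
incremental_by_week = {1: 20_000, 6: 295_000}
response_sales = 220_000  # offer ended after week 1, so tracked response stops growing

gaps = gap_vs_response(incremental_by_week, response_sales)
for week, gap in gaps.items():
    print(f"week {week}: incremental minus response = {gap:+,}")
```

The same campaign flips from looking $200,000 worse than its response number after one week to $75,000 better after six, which is why judging a controlled campaign before the halo has played out can be so misleading.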

We’ll dive deeper into explaining how and why this happens, plus address some of the execution and cultural aspects of using control groups in the next post.

Until then, questions, comments, clarifications?