As I said in the Heavy Lifting post, I think the Web Analytics community is becoming increasingly insular and should be paying more attention to what is going on outside its echo chamber, in the broader field of Marketing Measurement. I also think the next major leaps forward in #wa are likely to come from examining best practices in other areas of Marketing Measurement and figuring out how they apply to the web.

For example, did you even know there is a peer-reviewed journal called Marketing Science, which calls itself “the premier journal focusing on empirical and theoretical quantitative research in marketing”?

Whoa, say what?

This journal is published by the Institute for Operations Research and the Management Sciences, and its articles are the work of premier researchers in visitor and customer behavior from the best-known institutions around the world. In case you didn’t know, “peer-reviewed” means a bunch of these researchers (not including the authors, of course) have to agree that what you say in your article is logical based on the data, and that any testing you carried out adhered to the most stringent protocols – sampling, stats, test construction, all of it.

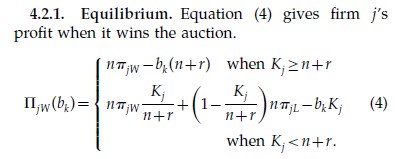

And, most mind-blowing of all, they show you the actual math right in the article – the data, variables, formulas, graphs – that lead to the conclusions they formulate in the studies. You know, like this:

So, the opinions coming from Marketing Science are probably a lot more reliable than, say, the average blogger in the echo chamber. Know what I’m saying? And here’s a surprise: the findings in these articles often contradict what is passed off in the blogosphere as “common knowledge” by the digerati.

In case you are thinking, “Well, these lab coats can’t possibly be exploring anything that would be interesting to me”, take a look at some article titles in the Mar – Apr 2009 edition of Marketing Science:

* Website Morphing

* Real-Time Evaluation of E-mail Campaign Performance

* Optimal Bundling Strategies in Multiobject Auctions of Complements or Substitutes

* Path Data in Marketing: An Integrative Framework and Prospectus for Model Building

* Click Fraud

Or article titles for the Jan – Feb 2009 edition of Marketing Science:

* Quantifying the Long-Term Impact of Negative Word of Mouth on Cash Flows and Stock Price

* Going Where the Ad Leads You: On High Advertised Prices and Searching Where to Buy

* Content vs. Advertising: The Impact of Competition on Media Firm Strategy

* My Mobile Music: An Adaptive Personalization System for Digital Audio Players

Tell me you want to put more faith in your RSS feed than in what these folks have to say in Marketing Science. What blogger shows you the math? What Research for Press Release piece passed around the echo chamber shows you the survey questions, the sample distributions, the confidence intervals?

But wait, there’s more.

The Research Committee at the WAA has been working hard on a program to open up some of these influential resources to WAA members. You can see the beginning of those efforts here. Expect more in the future as the effort ramps up. The knowledge sources currently in the hopper are these:

- Journal of Advertising

- Journal of Advertising Research

- Marketing Science

- Journal of Marketing

- Journal of Marketing Research

Now, you don’t have to agree with what folks who publish in journals like these have to say, but as an analyst, I sure want to know what they think, see how they arrived at those conclusions, and gut-check my own reality against them.

Because the analyst’s mission is truth-seeking: finding better truths.

Can you afford to ignore some of the most respected Marketing researchers in the world when formulating your hypothesis?

I see my alma mater’s library has this journal in print. Figure I’ll give it a gander when I drive by there later this week. Thanks!

The great thing about the journal format is there’s something for both kinds of brains. If you dig the math, there’s always math to spare.

If you are on the marketing side, there is enough summary information and structure around the argument that you can understand the conclusion without going through the math in detail.

Me, I’m in the latter category – I get the general idea of the equations, but I won’t be running them with data sets any time soon!

Hi Jim,

Thanks for exposing us to that publication. I think we are now indeed ready as a field to bring in more of what makes a body of knowledge a real “body of knowledge”! It will certainly not hurt us to incorporate some basic scientific methodology into what we do!

Jim, a good post and as an ex-academic, I could not agree with you more.

When I was in academia, I used to wonder why there is not much cross-pollination between academia and industry (except at the Harvard/MIT level). For the past decade, I have been an industry practitioner, and I still wonder why that is the case :-). Having had the unique opportunity of being on both sides (doing research and also applying it), I am in total agreement with you that both sides can benefit immensely if only they are a bit more open to taking in each other’s output.

I could debate the pros and cons of this subject long enough for it to be a post of its own :-). Again, a timely post.

Ned, thanks for the comment.

I have talked about this “cross-pollination” issue at length with others having academic backgrounds and of course I have my own experience with academia developing the WAA courses.

I think one of the issues is the “lack of standards” that, ironically, flourishes when so much of the discussion takes place among folks who lack any kind of formal background or experience.

When you think about the number of “can’t lose” concepts that have been embraced on the web only to fail later, and of a culture that allows people to never admit they were completely wrong about these concepts, you get a landscape that is completely foreign to academia.

Not only must academics be “right”, they have to accept responsibility and consequences for being wrong. Not so in the blogosphere.

The result is that academia generally trails practitioners in the number of concepts it is willing to embrace – particularly when similar concepts have repeatedly failed – as a tradeoff for being “right”.

After all, nobody wants to pay the big money to go to college and learn something that is proven to be completely wrong a year later.

At the opposite end of the scale, when “getting paid to view a web site” fails as a business model, you would think practitioners might realize that “getting paid to click on links” and “getting paid to search” will also fail. Yet practitioners are willing to repeat those same mistakes over and over because they don’t understand that these concepts are all fundamentally broken in the same way (audience quality), something an academic would see from a mile away after it failed the first time.

Might be nice to meet in the middle, don’t you think?

Jim, agree with you about meeting in the middle.

One of the problems on the academic side is that while peer review increases the robustness and quality of what is published, it also increases the cycle time from ideation to publication. Of course, there are journals like HBR and SMR that are geared towards practitioners, but I have been pushing my academic colleagues to find a ‘middle path’ even for other top journals, so that good ideas can be diffused while they still matter (in this fast-changing world, what is good today might be useless two years from now). Reducing this cycle time would add more value from a practitioner’s perspective, as more articles would have immediate “applicable and actionable” value instead of being just another framework or model.

On the flip side, as you point out, practitioners can learn from academia about putting a bit more rigor and rationale into a concept before ‘launching’ it. In academia, rarely does something get published without good empirical evidence or other proof supporting it. Now, I am not saying that we should run off and research every idea that comes up, but it amazes me how often we neglect empirical evidence (VOC, customer research, service complaints, etc.) and still doggedly pursue an idea just because we came up with it.

Anyway, as you said, we’ve got to collaborate more. Having been through the research grind before becoming a ‘practitioner’, I found it easy to show my peers the number of cases where they could “innovate” by borrowing from academia, from something as simple as ‘how to create a control sample’ to frameworks for Cust Sat.
